Think of Databricks not just as a platform, but as a masterclass in data engineering best practices. Even if you’re ultimately be using a different service or to craft every line of code yourself, understanding and utilizing Databricks will make you a significantly more effective and efficient engineer.

The Best Practices Blueprint: What Databricks Teaches You

You’ve already hit on some key areas where Databricks shines, and these are fundamental to sound data engineering:

-

- Familiar Notebooks : widely adopted and familiar tool within the data science community due to their interactive and integrated environment.

-

- Data Lakehouse Architecture: Databricks champions the data lakehouse, combining the flexibility and cost effectiveness of data lakes with the ACID transactions and schema enforcement of data warehouses. This isn’t just a buzzword; it’s a best practice for managing diverse data types and ensuring data reliability.

-

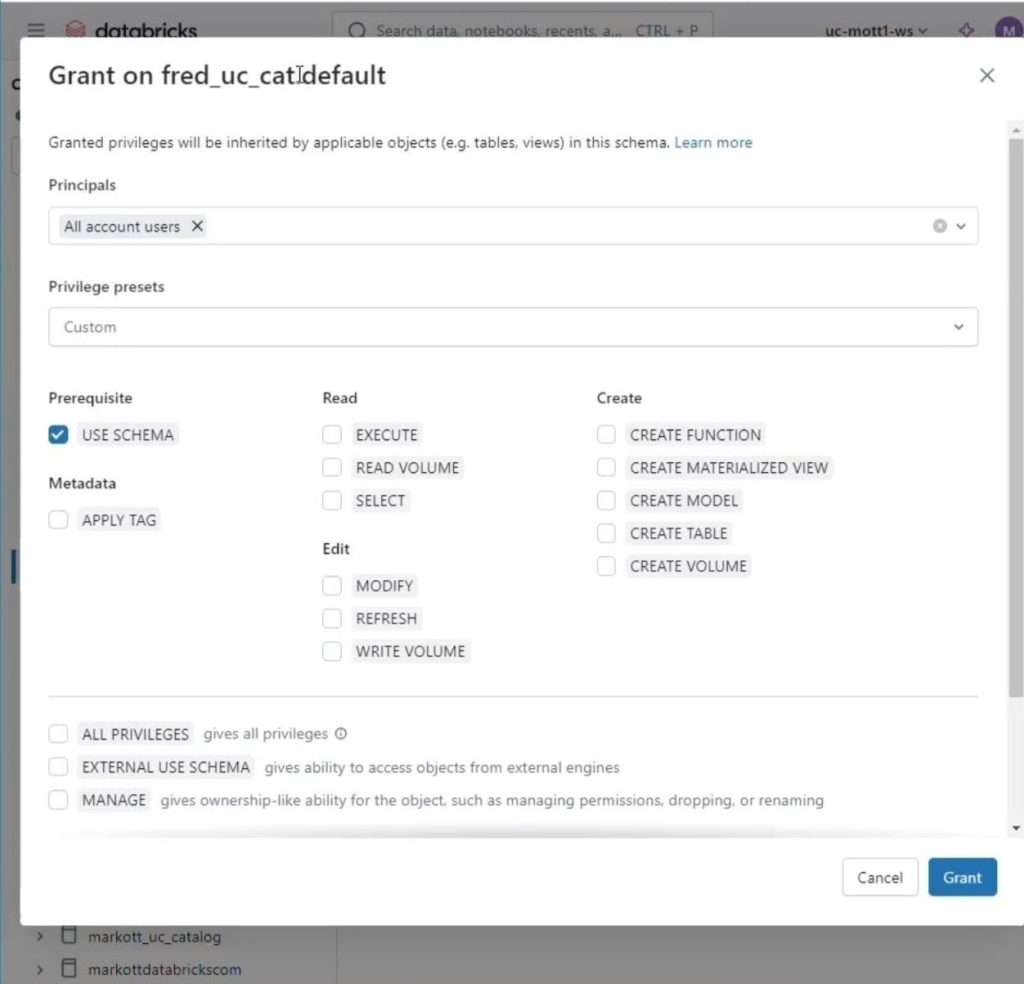

- User, Group, and Permissions Management: Security and access control are paramount. Databricks’ robust mechanisms for managing users, groups, and fine grained permissions teach you the importance of least privilege and secure data access.

-

- Data Governance and Discovery with Unity Catalog: Data isn’t just an asset—it’s a liability, especially if it’s lost, leaked, or not managed securely. This is where governance becomes critical. Databricks’ Unity Catalog is a game changer, providing a centralized metastore and governance layer. It provides data lineage, which traces the journey of data from its source to its destination, allowing you to see exactly how and by whom data was transformed. This instills the discipline of knowing what data you have, where it came from, and how it’s being used. It’s not just about compliance; it’s about making your data truly valuable and secure.

-

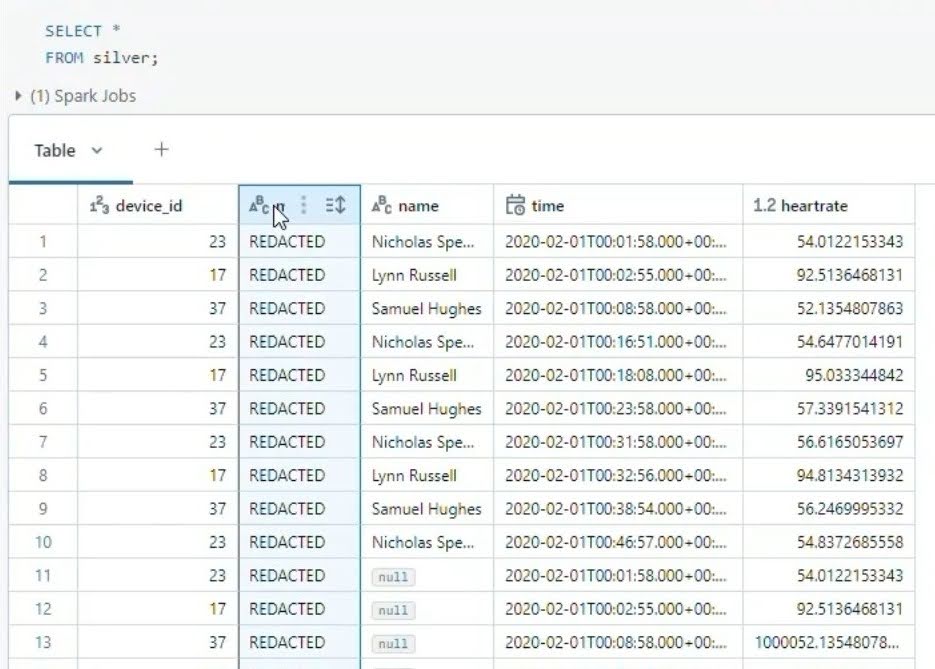

- Masking Sensitive Data: Protecting privacy is non negotiable. Databricks offers features to mask and anonymize sensitive information, guiding you towards building data pipelines that respect privacy by design.

-

- Ingesting Data (Including Streaming Data, CDC, JSON, and Unstructured Data): Databricks’ ability to ingest data from various sources is a core competency, but it truly shines when handling the complexities of data streaming and Change Data Capture (CDC). Streaming data presents unique challenges for engineers—ensuring exactly once semantics, handling late arriving data, and managing stateful operations are all tricky without the right tools. Databricks simplifies this through Structured Streaming, which treats a stream of data as a continuously appending table. Furthermore, its ability to process CDC feeds from databases capturing every insert, update, and delete is a powerful best practice for keeping your data lakehouse perfectly in sync with your source systems.Databricks also excels at handling other data types: JSON and unstructured data like images, audio, or PDFs. Its Auto Loader feature automatically ingests files, infers the schema of semistructured data like JSON, and handles schema evolution gracefully. For truly unstructured data, Databricks simplifies the process of extracting, processing, and preparing this information for analysis, often in a structured JSON format. It’s a masterclass in building fault tolerant, scalable realtime and batch pipelines for any data type.

Beyond the Obvious: More Databricks Wisdom

You’ve touched on the foundations, but Databricks offers even more insights into best practices:

-

- The Best Practices Are Universal: The most important lesson Databricks teaches is that these aren’t just features of one platform—they are enterprise grade best practices adopted by all major cloud data platforms. While the product names may change, the underlying principles are the same. For example:

-

- Unity Catalog vs. AWS Glue Data Catalog and GCP’s Data Catalog: Databricks’ Unity Catalog provides a centralized metadata store and governance layer. On Amazon Web Services, a similar function is handled by the AWS Glue Data Catalog. Google Cloud Platform also offers a service called Data Catalog.

-

- Medallion Architecture Zones: The three layers of the Medallion architecture (Bronze, Silver, Gold) are so fundamental that other platforms use a similar layered approach. On Google Cloud, this concept is often referred to as the Landing Zone, Raw Zone, and Curated Zone.

-

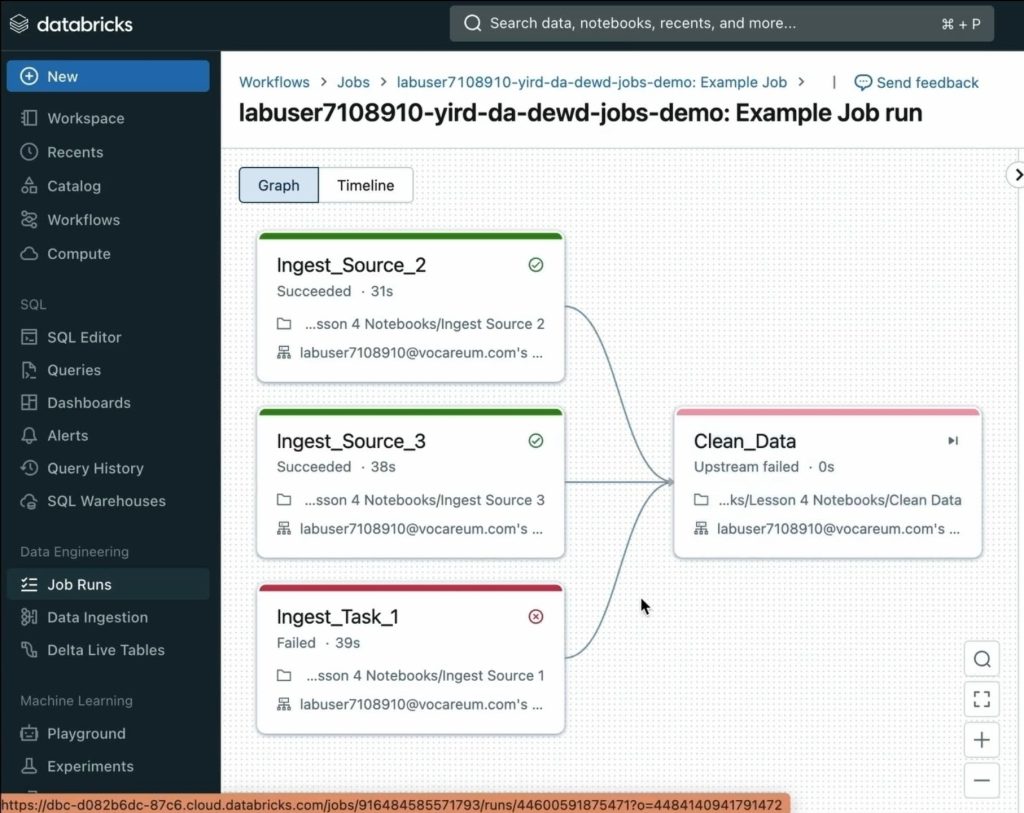

- Workflow Orchestration: The idea of orchestrating data pipelines is core to any modern data team. Databricks has its own robust workflows, but on Azure, this is handled by Azure Data Factory and its new Workflow Orchestration Manager, which is built on Apache Airflow. On AWS, you might use AWS Step Functions or AWS Glue.

-

- The Best Practices Are Universal: The most important lesson Databricks teaches is that these aren’t just features of one platform—they are enterprise grade best practices adopted by all major cloud data platforms. While the product names may change, the underlying principles are the same. For example:

-

- Embracing Enterprise Grade Best Practices: The vast majority of big data projects are born inside large enterprises, not small startups. These organizations have complex needs that go far beyond simple data loading—they require strict governance, security, and audibility. The best practices embedded in Databricks are a direct reflection of these enterprise demands. A small business might get by with a simpler setup, but learning Databricks teaches you the robust, scalable, and secure patterns that are non-negotiable in the real world. This is the difference between building a shed and an enterprise grade skyscraper.

-

- Cost Optimization through Efficient Querying (Partitioning and Clustering): This is where your wallet (and your on-prem hardware budget) will thank you. Databricks inherently guides you toward efficient data storage and querying.

-

- Partitioning: Organizing your data into logical segments (e.g., by date, region) drastically reduces the amount of data scanned for a query. Imagine searching for a book in a library where all the books are piled randomly versus one where they’re organized by genre and author. Partitioning is the latter.

-

- Clustering (Z-Ordering): This takes efficiency a step further by physically co-locating related data within partitions. For example, if you frequently filter by

product_idwithin a date partition, clustering byproduct_idensures that all records for a given product are stored close together, minimizing I/O.

- Clustering (Z-Ordering): This takes efficiency a step further by physically co-locating related data within partitions. For example, if you frequently filter by

-

- Why This Matters (On-Prem AND Cloud): This isn’t just about saving money in the cloud. Learning these optimization techniques on Databricks will make you a lean, mean, data processing machine even if you go on-prem. An unoptimized query on-prem will grind your expensive hardware to a halt, requiring you to throw more money at it. A data engineer who understands partitioning and clustering can make a smaller, more cost effective on-prem cluster perform wonders, maximizing your hardware investment. It teaches you to be resource conscious, a skill invaluable in any deployment model.

-

- These concepts are fundamental to columnar storage formats like Parquet and ORC, and you’ll apply them when designing your tables in Spark or other data processing frameworks. For the query engine itself, Trino is an excellent open source alternative. It’s a distributed SQL query engine designed for fast, federated querying across diverse data sources, making it a great choice for a DIY analytics stack.

-

- Cost Optimization through Efficient Querying (Partitioning and Clustering): This is where your wallet (and your on-prem hardware budget) will thank you. Databricks inherently guides you toward efficient data storage and querying.

-

- Managing External Tables: Databricks’ approach to external data management is a key best practice. An external table in Databricks registers a schema to data that lives in your cloud storage (e.g., S3 or ADLS). This separation of metadata (managed by Databricks) and data (managed by you) gives you flexibility. You can query data with Databricks while other tools or systems outside the platform still have direct access to the raw files.

-

- Version Control for Workflows: A key tenet of modern software engineering is version control, and data engineering is no different. Databricks makes this easy with Git integration for notebooks and code. This allows you to treat your data pipelines as code, committing changes, creating branches for new features, and merging your work back into a main branch. This practice ensures reproducibility, allows for collaboration, and provides a complete audit trail of every change made to a data pipeline.

-

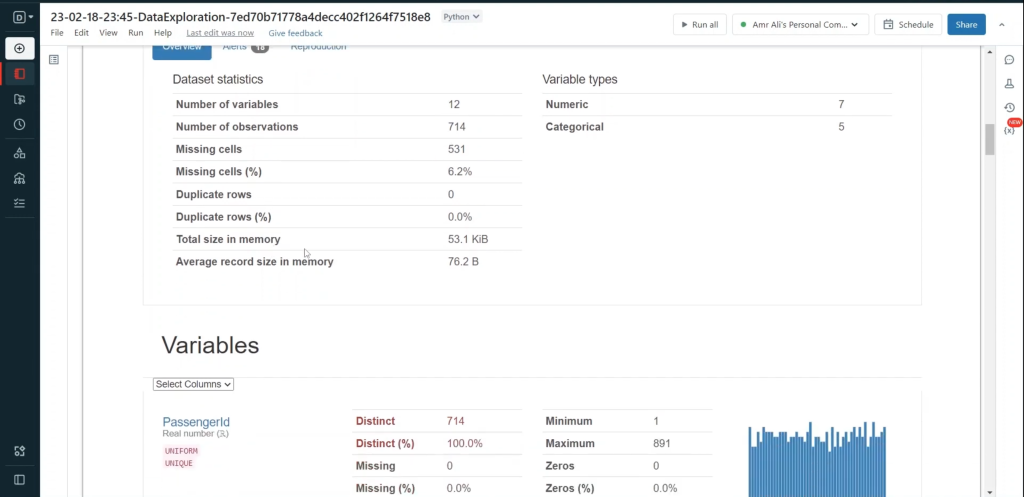

- Built-in Data Analytics Dashboards: Databricks SQL provides an environment for business users and analysts to directly query and visualize data. This built-in capability teaches engineers the importance of making their data products accessible and understandable to non-technical stakeholders. It forces you to think about not just the data pipeline, but the final consumption of the data, a critical part of the data product lifecycle.

-

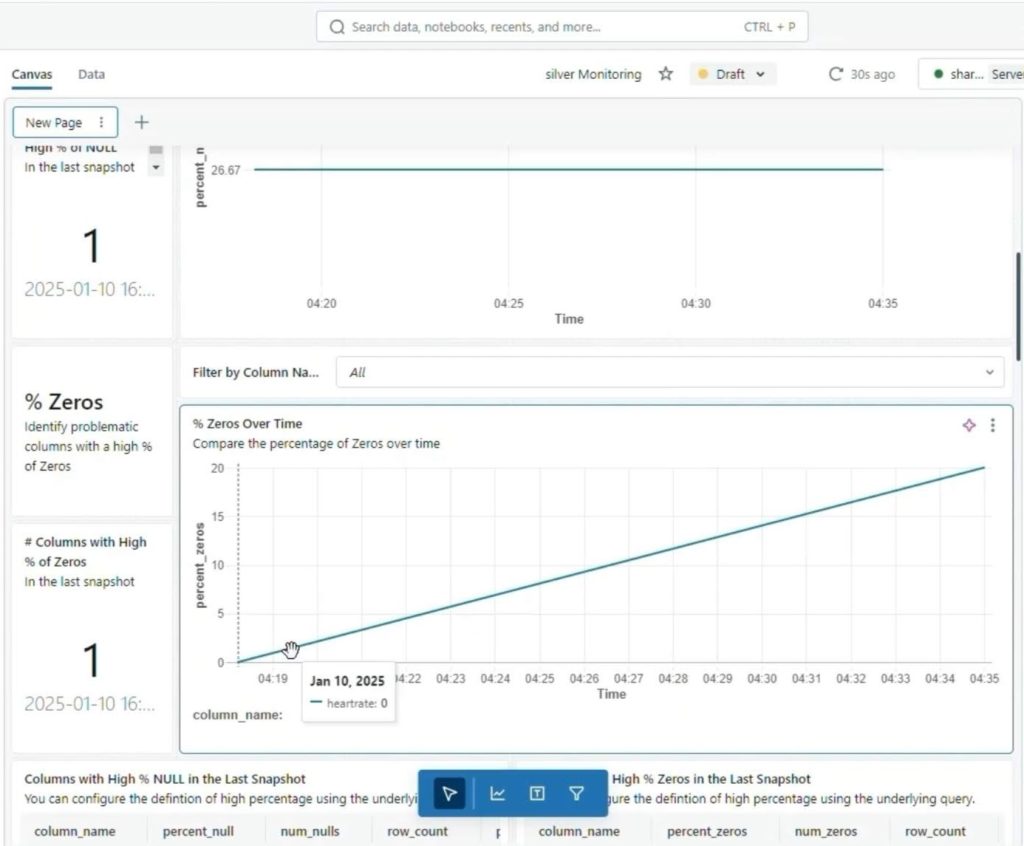

- Observability and Monitoring: What good is a data pipeline if you can’t see what’s happening inside it? Databricks provides a high degree of observability into your workflows and clusters, from realtime job metrics to detailed logs and historical performance data. This instills the habit of monitoring and troubleshooting from day one, ensuring your pipelines are not only functional but also reliable and easy to maintain.

-

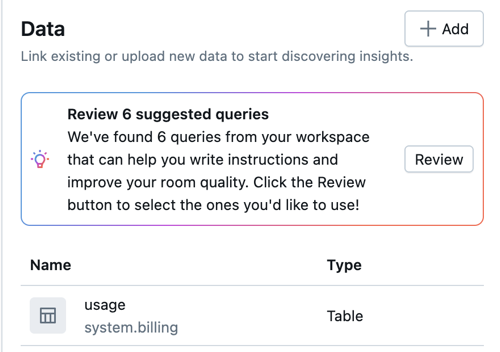

- AI-Powered Assistance (Databricks AI Chat Genie): Imagine having a knowledgeable mentor right there as you code. The Databricks AI Chat Genie accelerates your learning curve by guiding you in writing SQL queries and PySpark code. Stuck on a complex join or a tricky data transformation? The genie provides realtime suggestions and explanations, helping you master new syntax and best practices at an unprecedented pace. This immediate feedback loop is invaluable for rapid skill development, whether you’re a beginner or an experienced engineer tackling a new challenge. Not to mention the new AI/BI and “chat with your data” applications of Genie, now common to all major Data Engineering platforms, a tricky feature to implement on an on-prem stack.

-

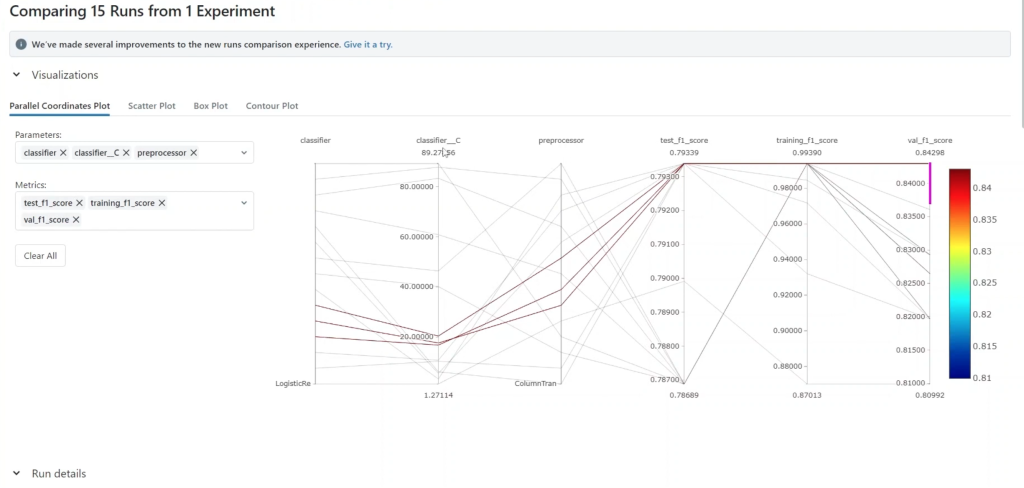

- Seamless ML Model Training with MLflow Integration: For data engineers looking to bridge the gap into machine learning operations (MLOps), Databricks provides an MLflow like interface for managing the entire ML lifecycle. This familiar environment allows you to track experiments, manage models, and deploy them with ease. Understanding how Databricks integrates data engineering with ML model training and deployment prepares you for productionizing AI solutions efficiently, a crucial skill in today’s data-driven world.

We Haven’t Even Scratched the Surface

What’s mentioned above is just the beginning. Databricks offers features that would take a DIY team months, if not years, to replicate and maintain. From serverless compute to a robust feature store, and from optimized Delta tables for faster queries to built-in tools for data sharing, the platform is a veritable Swiss Army knife of data engineering best practices. It embodies the pinnacle of modern data architecture.

The Power of Knowing Both Worlds

Ultimately, learning Databricks doesn’t mean you’re locked into it forever. Instead, it equips you with a profound understanding of the problems it solves and the best practices it embodies. You’ll gain:

-

- A “Why” for the “How”: You’ll understand why certain architectural choices are made, why data quality is critical, and why query optimization is essential, rather than just blindly implementing open-source components.

-

- Accelerated Learning Curve: The integrated nature of Databricks allows you to learn complex data engineering concepts much faster than painstakingly building everything from scratch. especially now that Databricks introduced a free version for experimentation and opened their learning resources for free.

-

- Informed Decision-Making: When you do decide to build your own stack, you’ll make informed choices about which open-source tools best replicate the robust, scalable, and governed environment you experienced with Databricks. You won’t just pick the popular tool; you’ll pick the right tool for the job.

So, don’t view Databricks as a corporate cage. See it as your high-performance training ground. Master its principles, understand its architecture, and internalize its best practices. By doing so, you’ll become a data engineer capable of building truly world-class solutions, whether they’re on Databricks or forged with your own hands from the finest open-source. Your data future (and your budget) will thank you.

Our Certified Data Engineers at Barkal Tech are here to help you in your data solutions exploration journey.

Recent Comments